BlinkAI is a Boston-based startup aiming to transform how camera sensors work through the use of machine learning. Below is our recent interview with Selina Shen, CEO of BlinkAI Technologies:

Q: Could you provide our readers with a brief introduction to BlinkAI Technologies?

A: We’ve created a machine learning model that allows cameras to essentially “see” like humans do, learning about the objects within the frame the same way a human child learns to see. Our AI-powered software for camera sensors is used across the automotive, mobile, and security industries.

Q: Why do we need to enhance camera imagery in general? Are our current cameras not good enough in low-light scenarios?

A: In low-light scenarios, there is a lot of thermal noise that corrupts the signal. Think of how grainy the pictures you’ve taken at night on your cell phone look. This noisy signal masks the information you need to see. Yet human perception can see through this noise. In fact, the human visual system does this all the time as input comes into our eyes, which is why we can see much better in the dark than the camera in your hand. That is what we’re replicating.

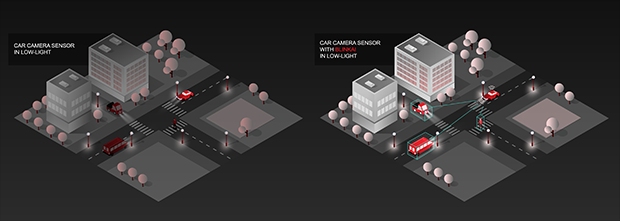

Our technology learns to understand the camera noise that corrupts the image, so it can better illuminate these difficult low-light scenes in real time.

Q: You’ve recently launched RoadSight to enhance visible-light cameras in autonomous vehicles; could you tell us more about it? Why is your technology needed in the automotive market specifically?

A: RoadSight advances beyond the conventional Image Signal Processor (ISP) pipeline and utilizes patented machine learning algorithms and computer vision to achieve 500% higher scene illumination. This dramatically improves object detection and other computer vision tasks in demanding low-light driving scenarios.

This means the front-facing, visible-light cameras used in self-driving cars, as well as in advanced driver-assistance systems (ADAS) in traditional cars, are far more likely to detect pedestrians and vehicles. We’re helping these systems see better where headlight illumination cuts off and there are no streetlights or ambient light.

Q: How does your technology compare to other AI technology used with sensors in the autonomous vehicle industry?

A: Our AI software can actually be used alongside the AI technology already running in other self-driving systems. BlinkAI better illuminates the inputs coming into the camera sensors, so the other AI technology onboard can better identify objects.

Q: What’s the best thing about BlinkAI that people might not know about?

A: The technology we are bringing to market was actually spun out of the Martinos Center for Biomedical Imaging, which is affiliated with Massachusetts General Hospital. My co-founder Bo Zhu led a team there developing deep learning for medical image reconstruction. They were looking at how to extract the most information from MRI scans when the data has to be acquired quickly.

However, he saw huge opportunities and challenges in nonmedical areas. Attuned to how important cameras are in every area of our lives, and to where this technology could be a real life-saver, we’ve been focusing on bringing it to market in the automotive and security markets to improve safety.

Q: What are your plans for the future?

A: BlinkAI is currently collaborating with top automotive manufacturers and automotive technology suppliers to test its technology in both autonomous and traditional vehicles in poor lighting and bad weather.

We’re currently improving the detection of pedestrians and vehicles through front-facing, visible-light cameras, but we’re also looking at improving interior cabin monitoring and better illuminating side and rear camera views during nighttime or early-morning parking.

In the future, our technology could also improve visibility by removing weather effects such as rain, snow, and fog from camera sensor inputs, further improving driving safety.
